(Originally written on March 24, 2016 but left unpublished; updated, revised and finally published in 2018!)

Getting hired in the gaming industry OR Tips and Tricks for the job hunting Indie Game Designer/Developer.

Being the head honcho at a small game studio means I’m occasionally bombarded with e-mails from people looking for a job or an internship at my company. I’ve seen some good and a lot of bad, so I figured I might as well share what goes through my head when I get resumes and applications, to help you improve your odds of landing a job in the biz.

Whether you’re a student trying to land an internship or a seasoned vet jumping ship, these are things to keep in mind.

Have an online presence

Whether it’s LinkedIn, Twitter, DeviantArt or your own web site, have some sort of online presence that the folks looking at you can refer to. However, do keep in mind that the material on the web can help you and hinder you at the same time. So if you’ve posted something on Facebook that may make you look like a douche for some reason or other, make sure it’s private.

Most studios like mine don’t have dedicated HR people, and guys like me don’t use whatever fancy black magic tools HR people use. If you’re applying for a position, I’m going to Google you. My usual search is “<your full name> + <Artist/Programmer>” or “<your full name> + <your school/the company you work for>”. Try Googling yourself. Find anything? If you do, great; if you find nothing, it’s not that bad.

Have a curated portfolio

This is really important for artists, and by curated portfolio I don’t mean your DeviantArt page. Yes, I did say to have one in the previous section, but that’s the fallback if I can’t find your portfolio (which should be part of your website; you do have one, right?). So what do I mean by a curated portfolio? A showcase of only your *best work*.

This makes sense for artists in all capacities, as well as for those looking for a non-art position. Showcase some of the best work you’ve done and make sure it’s polished.

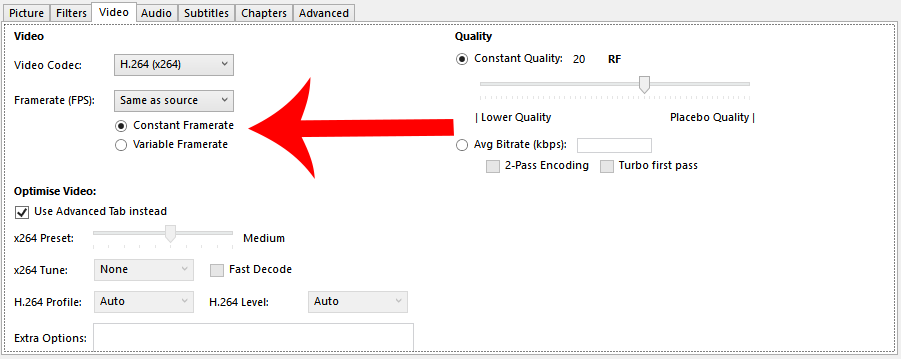

Have a demo reel

This may seem pretty foreign to those who are not artists, but a demo reel is a great way to give potential employers a quick 30-60 second preview of your best work. It could be just a slideshow of stills or video footage of a game you’ve worked on; the important thing to keep in mind is that everything in it should highlight your skills.

Know what you want to do / Know your specializations

This is more applicable to students trying to get into the biz. A lot of video game schools cater to the underachieving gamers out there, which means that most of the time the skillsets taught are rather broad, with little to no specialization. That’s not a bad thing, since it usually means students come out fairly well rounded (if they actually put the effort in). The downside is that this lack of specialization means most graduates have no idea what they want to do or focus on other than “just make games”.

“Just because you didn’t specialize in something doesn’t mean you’re a generalist”

It’s best to have an idea of what you’re interested in. The most common specializations are gameplay programming, engine programming, graphics programming, network programming, tools programming, level design, lighting, character design or character modelling, environment design, audio/sound programming, etc.

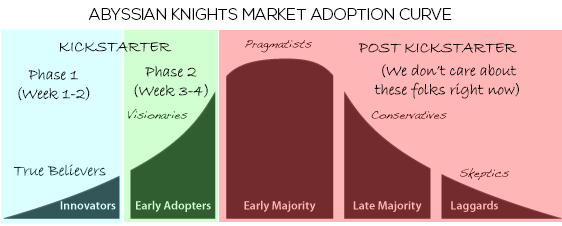

Game jams are ok, releasing something is better

Back in 2012 or 2013, I would have told any student trying to get into the video games industry to do as many game jams as they could. That was then. Now that we’re approaching the end of 2018, I would still suggest doing game jams, but it’s better to focus on getting a small game released somewhere, or at least getting a couple of publishable prototypes done.

What’s changed, you ask? Well, for starters, unlike in 2012, the market is hugely saturated with people wanting jobs in game development, and for the most part their portfolios are filled with unpolished prototypes. You want to set yourself apart from that.

If you can’t actually release a game, put together a publishable prototype. This is a term I stole from Mark Cerny, the architect behind the PlayStation 4. It’s basically a super-polished prototype made to test out the mechanics and to showcase what the final game *might* look like. Think of it as an E3 demo for a game you’re working on.

Look into the details of your contract

This is something I warn a lot of students about. Many companies (large and small) have IP clauses in their contracts stating that, for the entire time you’re employed there, anything and everything you make or come up with is property of the company. That means that as long as you work for that company, any cool games you might have as side projects, or even story ideas, are property of the company. It’s pretty shitty, and you can try to talk them out of it, but for the most part, unless you’re super awesome at what you do and they really, really, really, really want you, odds are you’re going to be given a take-it-or-leave-it situation.

Maybe one day game companies will learn from engineering firms and add the following line to their contracts:

“for the purposes of <whatever section in reference> and hereof, the terms Conceptions, Inventions and Copyrights shall mean only those Conceptions, Inventions and Copyrights that the employee may make alone or in conjunction with others during the period and arising out of his/her engagement with the company.”

Understand NDAs

If you’re going to work in the video game field, you really need to know what non-disclosure agreements (NDAs) are and what they mean. Unless your contract says you can talk about the project after a set period of time (usually once the game is released), it usually means YOU CANNOT TALK ABOUT THE PROJECT, EVEN AFTER IT’S RELEASED.

Artists and Art Style

Quite often I get portfolios from artists who create superb work but seem stuck in one particular art style. While there’s nothing wrong with developing your own style, unless you plan on getting a character design job, there’s a high chance you’ll be asked to follow an art style prescribed by your art director.

My suggestion is to showcase not only work in your own art style in your portfolio, but in other art styles as well. This is typically a big reason why I turn down many fantastic artists: the prescribed art style for our projects is usually very anime-inspired (largely because we usually get actual artists from the anime biz in Japan to do the designs), and a lot of folks here can’t quite hit it.

For the stubborn die-hards out there who are adamant about sticking to their own art style and landing a character design job: the reality is that character design jobs are few and far between. As someone trying to get into the industry, you’re better off being able to do more than just character design.

Render things in engine

If you’re a 3D artist planning on getting a job in the video game industry, showcase renderings of your work running in engine. It doesn’t matter if it’s Unity, Unreal Engine, CryEngine/Lumberyard or some other 3D game engine. In my own experience, and that of friends in the industry who review 3D artists’ work, a nicely rendered model captured as in-engine footage in a demo reel earns you much bigger points than just a nice sculpt in ZBrush or something.

The reason is that getting a really nice-looking ZBrush sculpt into a game engine without bogging it down takes several steps, such as retopology and baking the sculpt’s detail into normal maps. Models straight out of ZBrush or a similar sculpting tool are completely unusable in engine because the poly count is usually waaaaay too high. If you can demonstrate that you can not only make nice 3D models but also reduce the poly count while keeping them looking good, you’re in a much better position to be hired.

There is no such thing as the “ideas guy”

OK, maybe there is, but it’s usually tied to another position. The closest would be a pure game designer role, but to be honest, unless you can bring lots of $$$$ to the table, no one is going to hire you purely as an ideas guy. Understand that.

Can’t program and can’t do art? Don’t worry

While most gaming jobs focus on programming or some sort of artistic work, there are also jobs outside those areas. These include producer, scenario writer, motion capture actor/director/technician, QA tester (game tester) and a whole slew of marketing and PR roles like community manager or social media manager.

These roles, however, are harder to find at smaller studios, because realistically there’s no budget for them specifically, so everyone there ends up handling those duties.

Avoid Programmer Art whenever possible

Programmers, fugly programmer art is *only good* for internal prototypes. If you’re a student in a game development program and your primary goal is to handle the programming side of games, work with an artist to get some polished artwork, buy some, or just “borrow” some if you have to. Even though your work is primarily on the programming side, the people looking at your portfolio will most likely be very visual people.

If you can’t get artwork that looks good, at least make it less distracting. Stick with blocks or the stock assets that come with the engine. Most of the “free” assets on marketplaces don’t look that good, or look out of place in a sparsely put-together environment.

You’ll need more than Passion & Talent

Passion and talent can only get you so far; you need a solid work ethic and a willingness to get the job done, even if it means doing something you don’t like.

In fact, sometimes we hire people who aren’t quite as passionate or talented simply because we know they’re willing to get the job done even though it’s not what they want to do. Nothing is worse than hiring someone who thinks they’re a rock star and half-asses stuff because it’s not “their way” or because “their idea” got rejected.

Your ideas and opinions are great, but sometimes the project is locked in place. You’re probably going to have to revise and update the same bits of code and artwork over and over and over until you have nightmares, so do yourself a favour and drop the ego at the door.

Making games is rewarding when it’s done, but for the majority of the process it might suck, and suck hard, because making video games is NOT EASY.

Don’t give up!

This is a big one. The biggest reality check most students get when they graduate from gaming school is that it’s not exactly easy to get a job making games. You could be the best in your class or the best in your school, but that doesn’t necessarily put you in the top 5% of the industry. On top of all the other graduates who flood the market every year, many also forget that they’re competing for positions with industry vets who just lost their jobs.

At the time of writing, both Capcom and Bandai Namco had shut down their studios out in Vancouver (among other closures). All the people who lost their jobs there are probably looking at the same gameplay programmer or character modelling jobs you’re looking at.

It’s super discouraging to graduate at the top of your class only to find yourself struggling to find a job, but don’t give up; try another company. Larger companies such as EA, Ubisoft and Square Enix are going to have the toughest competition because everyone wants to work on the next Battlefield, Assassin’s Creed or Final Fantasy game. If you can’t cut it there (and don’t worry, many won’t), take a look at smaller indie companies, or go do some game jams and see if you can start a project with someone there.

Keep developing your skills

Do yourself a favour and keep your skills up to date. Just because they didn’t teach you something in school doesn’t mean you can’t learn it on your own.

If there’s any upcoming technology that might be hot, get on it.

VR, Unity, Unreal, mobile, whatever.

Every once in a while there’s a rush in the industry to hire people with experience in some hot new tech. It used to be mobile development for iOS and Android, then it was virtual reality and Unity. Keeping your skills up to date is especially important if you’re looking for a job. For a few months, I got contacted by one or two people pretty much every single week because everyone was looking to get into VR development, and I happened to have some experience screwing around with the original Oculus Rift DK1 back in the day.